The wide availability of selfies on social media has helped fuel artificial intelligence (AI)-powered scams.

Speaking to Bloomberg News on Friday (Feb. 28), Center for Strategic & International Studies researcher Julia Dickson said cybercriminals are stealing profile pictures and personally identifiable information, or purchasing them from underground marketplaces.

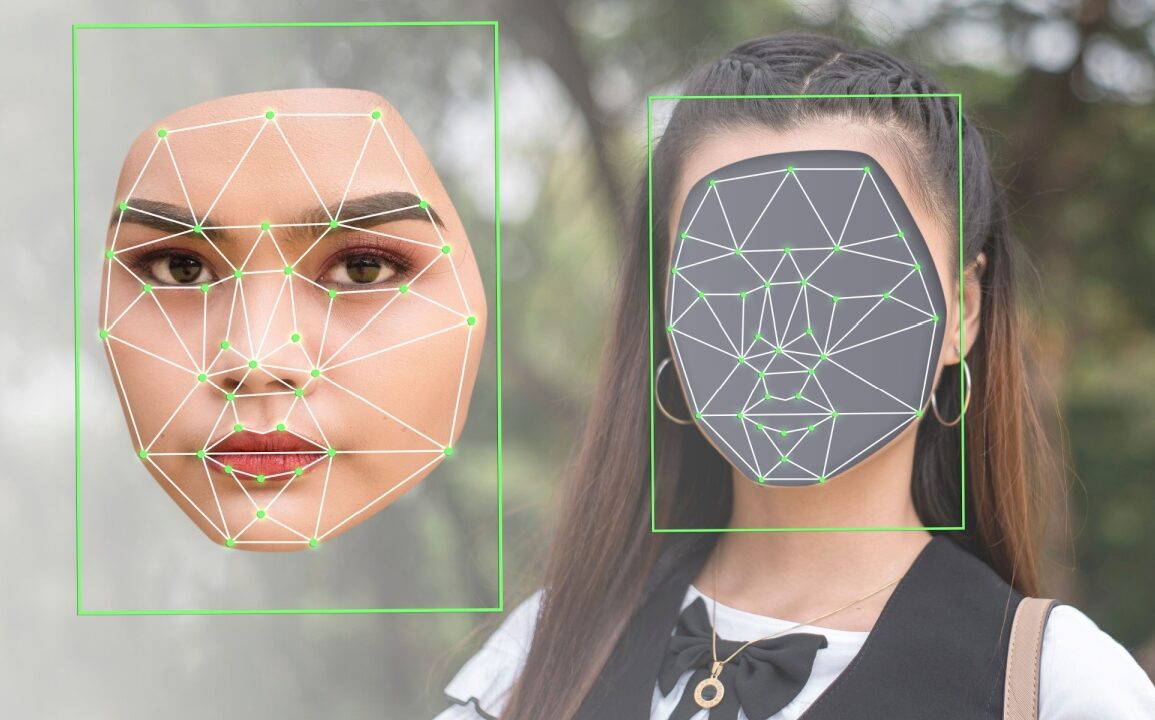

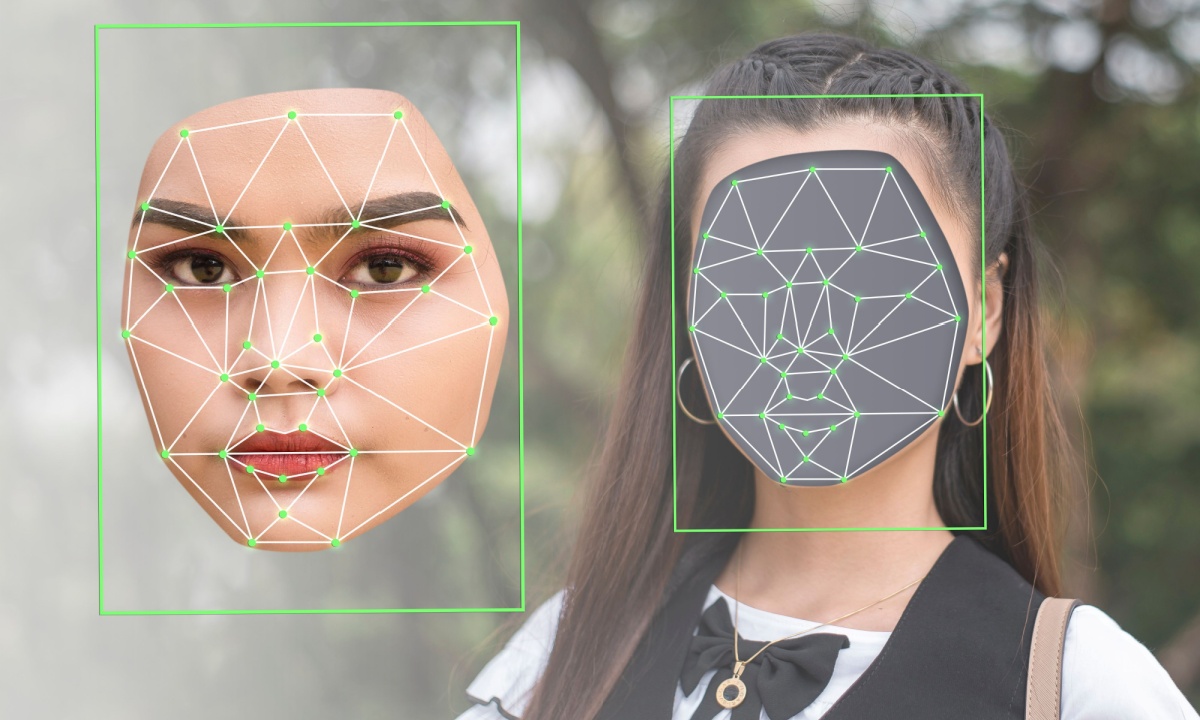

From there, scammers use the data to craft deepfakes that let them get around digital verification systems and know-your-customer (KYC) measures.

Bloomberg also cites a report from identity verification firm Socure showing the ease with which scammers can pull a selfie from a person’s social media profiles, then use it with stolen personally identifiable information to create a phony ID.

Scammers can also take headshots and create a full photo of a person, using AI to create natural-looking backgrounds, thus letting them bypass security measures that require users to take a live picture of themselves.

In addition, the report said, scammers can create realistic images of fake people, asking generative AI (GenAI) image generators to create a selfie based on physical descriptions.

Deepanker Saxena, head of document verification products at Socure, told Bloomberg scammers can also take old photos of people and use AI to age them to match their appearance in the present day.

PYMNTS examined these sorts of threats last month in an interview with Mzukisi Rusi, Entersekt’s vice president for product development, authentication products.

“Every new technology brings new risks,” said Rusi.

As that report noted, most people “live their lives on their phones, which have been a conduit for one-time passwords.”

But if an attacker can trick a mobile carrier into thinking that a legitimate customer wants a new number, has lost their phone or wants a new SIM card, those one-time passwords can also be compromised.

In other cases, fraudsters “push bomb” their victims with push notifications that eventually leave people tired or confused, to the point that they give in, click on a link and end up at the mercy of a scammer.

“It’s incumbent” on financial institutions “to stay one step ahead and constantly evolve their defenses,” Rusi said.

The threat has gotten the attention of law enforcement. Last year, the Treasury Department’s Financial Crimes Enforcement Network (FinCEN) issued an alert to help banks spot scams associated with the use of deepfake media created using GenAI.

“While GenAI holds tremendous potential as a new technology, bad actors are seeking to exploit it to defraud American businesses and consumers, to include financial institutions and their customers,” said FinCEN Director Andrea Gacki. “Vigilance by financial institutions to the use of deepfakes, and reporting of related suspicious activity, will help safeguard the U.S. financial system and protect innocent Americans from the abuse of these tools.”