Abstract

In recent years, the spread of social networking services (SNS) has made it easier to connect with people who have similar opinions. Accordingly, similar opinions are shared within groups, while exposure to different opinions tends to decrease. As a result, opinion polarization among groups is more likely to occur. Several studies have simulated opinion dynamics to identify the conditions under which opinion polarization occurs, but specific methods for mitigating it remain poorly understood. Recently, it was found that even a few artificial perturbations inspired by adversarial attacks can reverse the outcome of voter models, allowing a minority opinion to become dominant. In this study, we conducted numerical simulations to determine whether opinion polarization can be mitigated by adding such perturbations to the network in opinion dynamics models. The results show that opinion polarization can be mitigated by strategically generating perturbations to the weights of network links, and that the effect increases with the perturbation strength parameter. Moreover, our analysis reveals that this polarization mitigation method is more effective in larger networks. Our results suggest an effective way to prevent opinion polarization in social networks.

Introduction

Social media, unlike conventional media, is a medium in which information senders and receivers are connected in a network, and information spreads through interactions arising from interpersonal connections. The widespread adoption of social media in recent years has enabled individuals to rapidly acquire large volumes of information. Ideally, this allows users to access diverse perspectives and make more informed decisions1. However, because it has also become easier to connect with people holding similar opinions and values, similar opinions tend to be shared within groups, while opportunities to encounter different opinions have decreased. In such an environment, echo chambers and opinion polarization among groups are more likely to occur2,3,4,5, potentially leading to the spread of misinformation2,6. Modeling studies of these phenomena employ agent-based models that simulate opinion formation while varying parameters such as topic controversy, similarity, and social influence, and they reveal that these parameters significantly affect the final distribution of opinions7,8.

Furthermore, the structure of social networks also influences opinion polarization9. For example, selective reconnection, such as unfollowing users with differing opinions within a social media network, has been shown to accelerate opinion polarization10. Moreover, even when reconnection is based on the structural similarity of networks rather than opinion similarity, polarization is promoted11.

Although the understanding of the conditions leading to opinion polarization has progressed, methods to mitigate polarization have not been sufficiently studied. Previous research suggests that alleviating polarization requires controlling topic-related parameters or prohibiting network reconnection, but such interventions are difficult to implement in real social networks.

On the other hand, adversarial attacks on opinion dynamics12,13,14 have the potential to help mitigate polarization. Inspired by adversarial attacks on deep neural networks (where small perturbations distort the neural network outputs), this approach involves introducing strategically generated small perturbations to the social network to drive opinion dynamics towards a target state. While the original research12,13 used this adversarial attack to distort opinion dynamics (e.g., reversing the vote outcome even when one opinion is in the majority), targeting a neutral opinion state could potentially mitigate opinion polarization. It is worth noting that in the field of deep neural networks, the beneficial use of adversarial attacks has been actively explored in recent years15,16.

Therefore, this study investigates the possibility of mitigating opinion polarization by introducing small artificial perturbations into social networks. Inspired by adversarial attacks, we propose a method to alleviate polarization in opinion dynamics models on social networks and verify its feasibility through numerical simulations.

Results

To explore this, we developed an agent-based model that applies perturbations inspired by adversarial attacks to the network in an opinion dynamics model. We conducted simulations to assess whether these perturbations can effectively mitigate opinion polarization. The specifics of the model are detailed in the Model section.

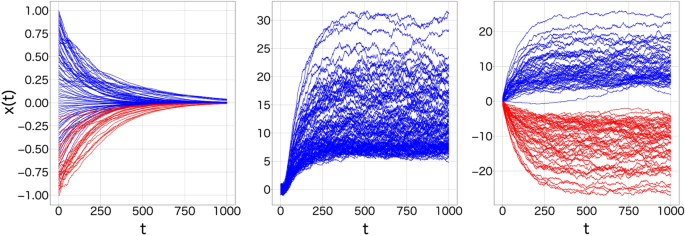

First, we present illustrative examples that build intuition by showing typical opinion distribution patterns without polarization mitigation. When the parameters $\alpha$ and $\beta$ are varied, the dynamics can be classified into three major types, as shown in Fig. 1. This result is consistent with the findings of a previous study7.

In Fig. 1, the horizontal axis represents the number of time steps in the simulation, and the vertical axis represents the opinion variable. For the opinion trajectories of $N=100$ agents, opinions that are positive at the end of the simulation are shown in blue, and those that are negative are shown in red. Figure 1 (left) shows an example of a parameter set with small controversy $\alpha$. In this case, the opinions of agents “converge” to 0 (neutral) over time, leading to a consensus. Figure 1 (center) presents an example of a parameter set with small sensitivity to similarity $\beta$. In this case, one side’s opinion is absorbed into the other’s and amplified, resulting in “(one-sided) radicalization.” Figure 1 (right) shows an example where both $\alpha$ and $\beta$ are large. In this case, repeated contact with agents holding similar opinions leads to “polarization,” where the two opposing opinions are amplified and few individuals maintain a neutral stance. Thus, the final opinion distribution changes significantly depending on the values of $\alpha$ and $\beta$.

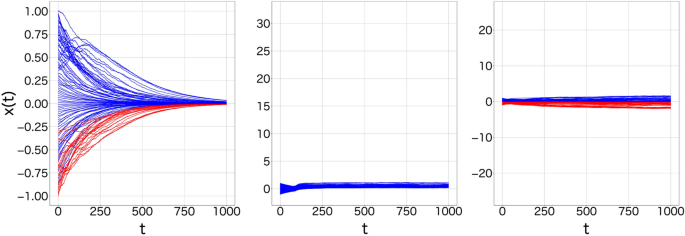

Next, we examine the effect of applying the perturbation described by Eq. (4) to the three parameter sets shown in Fig. 1, using $\epsilon = 0.1$. The result is shown in Fig. 2. With polarization mitigation, the group opinion does not deviate significantly from the initial opinion distribution for any of the three parameter sets, even after many steps. This is likely because the perturbation allows agents with different opinions to come into contact more frequently, thereby increasing the influence of opposing opinions.

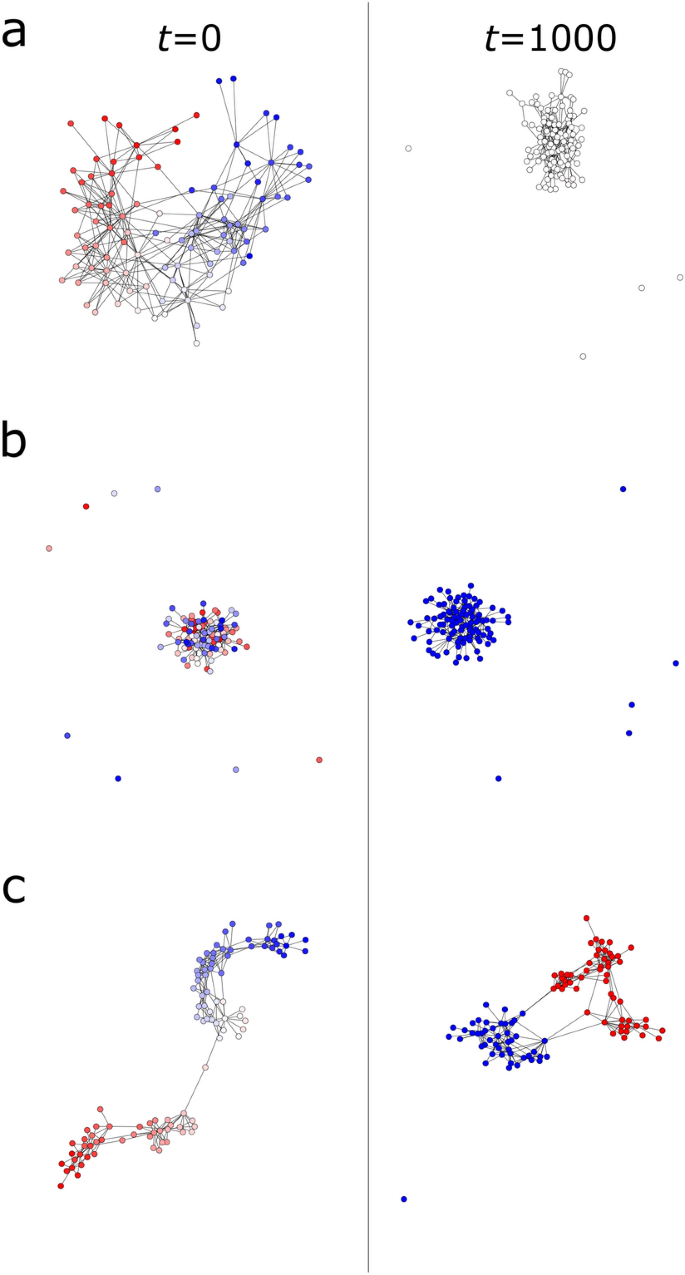

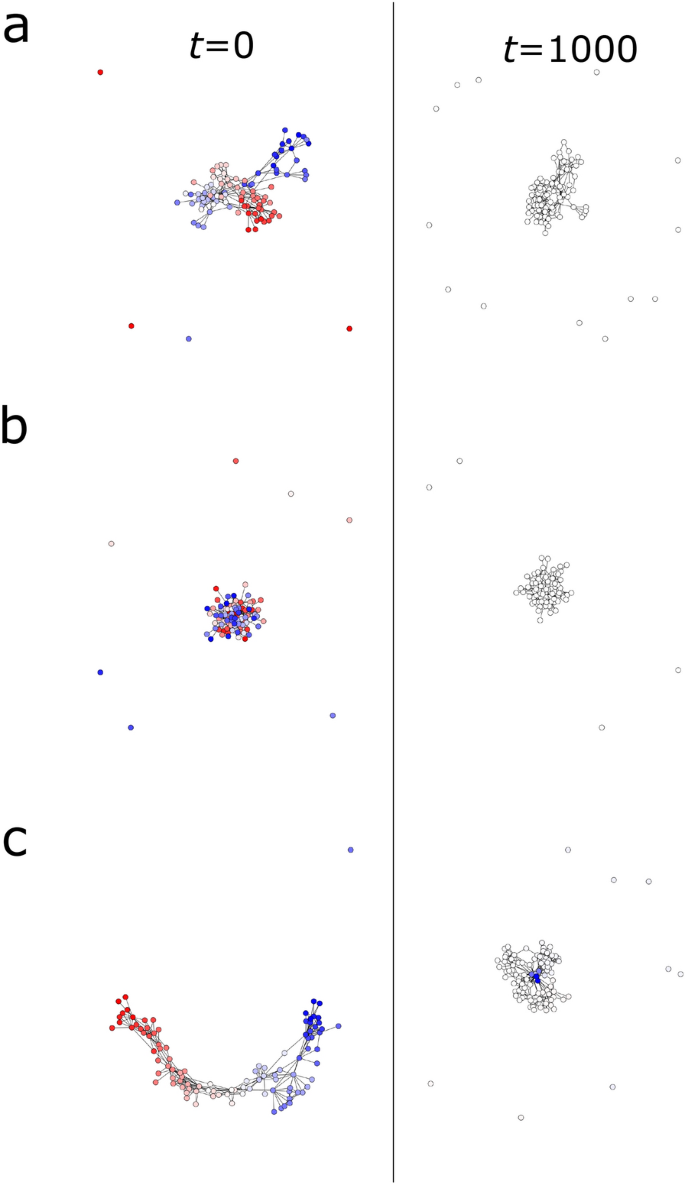

Next, we examine how network structures are affected with and without polarization mitigation. Figure 3 shows the networks of convergence, one-sided radicalization, and polarization, respectively, in the case without polarization mitigation. In each case, the left side shows the network at the beginning of the simulation, and the right side shows the network at the end. Agents with positive opinions are shown in blue and those with negative opinions in red, with color intensity increasing with the absolute value of the opinion.

Figure 4 shows the networks for the case with polarization mitigation, using the same mapping between the absolute value of the opinion and color intensity as in Fig. 3. For the converging cases in panel (a) of both figures, the network structure remains similar with and without mitigation, because the opinions follow the same transitional behavior. For the one-sided radicalization cases in panel (b), the distribution of opinions at the end of the simulation differs greatly between the cases with and without mitigation. However, both networks exhibit a similar structure, forming one large cluster of nodes with similar opinions and several isolated nodes. In the polarization case with mitigation (Fig. 4 (c)), the network exhibits a structure similar to that of the converging case in (a). In the case without mitigation (Fig. 3 (c)), although polarization has occurred, the network is not divided into multiple clusters. Instead, two opinion clusters form within one large cluster and are connected to each other by a very small number of links.

To further illustrate the effectiveness of our intervention in mitigating polarization, we examine scenarios where the network starts in either an extreme opinion state or a highly polarized state. In these cases, we assess whether the intervention can successfully drive opinions back towards neutrality.

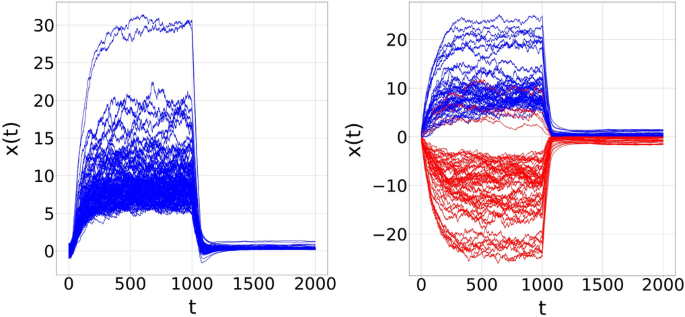

Figure 5 depicts these scenarios using the same parameters for radicalization and polarization as in Figs. 1 and 2. In Fig. 5 (a), one-sided radicalization has occurred; after the intervention is applied at $t=1000$, opinions are driven back toward neutrality. In Fig. 5 (b), the system begins with two extreme opinion clusters, and the intervention is applied at $t=1000$, after polarization has already occurred. In this case as well, the intervention brings opinions back to neutrality. Thus, both results show that the intervention mitigates polarization even after it has emerged, gradually driving opinions toward neutrality over time.

Depolarization by intervention for already-polarized networks. Left: one-sided radicalization; right: two extreme opinion clusters. In both cases, the intervention at $t=1000$ drives opinions back toward neutrality. The same parameters as in Fig. 2, where radicalization or polarization occurred, were used: $N=100$ and $\epsilon = 0.1$.

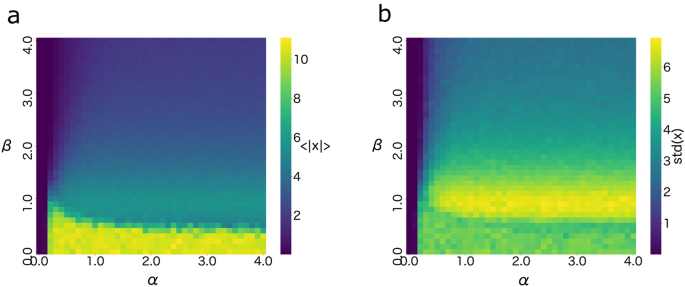

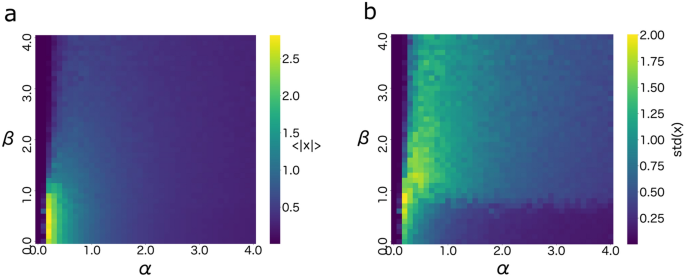

Following these examples, we perform a parameter sweep to assess how generally polarization mitigation is effective across a wider range of conditions. We measure the effect using the mean absolute value and the standard deviation of the agents’ opinions at the end of the simulation. The mean absolute value $\langle |x| \rangle$ measures the average extremity of opinions, that is, how far opinions deviate from neutrality on average. The standard deviation $\mathrm{std}(x)$ captures the dispersion of opinions around the mean, indicating how opinions are spread or clustered. Together, these metrics provide a comprehensive assessment of opinion polarization: lower values indicate a lower degree of polarization and, when mitigation is applied, a more effective mitigation.

Figures 6 and 7 are heatmaps showing the degree of polarization without and with polarization mitigation, respectively, as $\alpha$ and $\beta$ are varied while the other parameters are kept the same as in Fig. 1. The horizontal axis represents $\alpha$ and the vertical axis represents $\beta$, both varied from 0.0 to 4.0 in increments of 0.1. Figure 6 shows that, without mitigation, the region where $\alpha$ is extremely small ($\alpha \le 0.2$) has both a small mean absolute value and a small standard deviation, indicating that opinions converge. This behavior reflects a scenario in which the issue is barely controversial, so agents readily align their opinions and reach a consensus.
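Both metrics can be computed directly from the vector of final opinions. The sketch below uses NumPy and assumes the population standard deviation (`ddof=0`); the text does not state which convention was used. The toy opinion vectors are illustrative inputs, not simulation results.

```python
import numpy as np

def polarization_measures(x):
    """Return (<|x|>, std(x)) for a vector of final opinions x."""
    x = np.asarray(x, dtype=float)
    mean_abs = float(np.mean(np.abs(x)))  # average extremity (distance from neutrality)
    spread = float(np.std(x))             # population std (ddof=0), an assumption
    return mean_abs, spread

# Toy final states for N = 4 agents (illustrative inputs, not simulation output)
print(polarization_measures([0.0, 0.0, 0.0, 0.0]))    # consensus  -> (0.0, 0.0)
print(polarization_measures([2.0, 2.0, 2.0, 2.0]))    # one-sided  -> (2.0, 0.0)
print(polarization_measures([-2.0, -2.0, 2.0, 2.0]))  # two-sided  -> (2.0, 2.0)
```

The toy cases show why both metrics are needed: $\langle |x| \rangle$ alone cannot distinguish one-sided radicalization from polarization, whereas $\mathrm{std}(x)$ separates them.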

In the region where $\beta$ is small ($\beta \le 0.5$), the mean absolute value $\langle |x| \rangle$ is highest and the standard deviation $\mathrm{std}(x)$ is intermediate, indicating that one-sided radicalization occurs. In this case, the low sensitivity to opinion similarity leads agents to interact with others who hold different opinions. When $\alpha$ is moderately high, this dynamic ultimately pulls agents toward either the positive or the negative opinion, leading to radicalization.

In the other regions, as $\beta$ increases, agents are more likely to interact with those holding similar opinions. This causes one group of agents to shift in the positive direction and the other in the negative direction, resulting in polarization.

Figure 7 shows how the opinion dynamics change when polarization mitigation is introduced with $\epsilon = 0.1$. Compared to the case without mitigation (Fig. 6), radicalization is significantly suppressed when $\beta$ is small, and polarization is effectively suppressed even as $\beta$ increases.

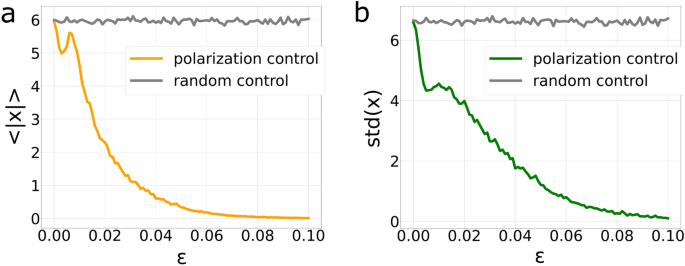

Next, we focus on the effect of the perturbation strength on polarization suppression. We vary $\epsilon$ while keeping $(\alpha, \beta, K) = (3, 3, 3)$ fixed. Figure 8 shows $\langle |x| \rangle$ and $\mathrm{std}(x)$ averaged over 100 simulations for $\epsilon$ from 0 to 0.1 in increments of 0.01. Both $\langle |x| \rangle$ and $\mathrm{std}(x)$ decrease rapidly with increasing $\epsilon$, and at $\epsilon = 0.08$, $\langle |x| \rangle$ becomes nearly zero, indicating effective mitigation of polarization. This critical value of $\epsilon$ represents the threshold at which the intervention drives opinions toward neutrality, counteracting the natural tendency toward polarization.

The perturbation controlled by $\epsilon$ modifies the weights of the network links, weakening connections between agents holding similar opinions and strengthening those between agents with differing opinions. This disrupts the formation of isolated opinion clusters, which are the primary drivers of polarization.

Below the threshold $\epsilon = 0.08$, in the small range $\epsilon \in [0, 0.01]$, we observe a temporary increase in $\langle |x| \rangle$. A possible explanation is that in this range, some agents with initially positive opinions overshoot to negative values, while some agents with negative opinions overshoot to positive values. This overshooting destabilizes the opinions of connected agents, potentially increasing $\langle |x| \rangle$. Further details and supporting figures are provided in the Supplementary Information (Fig. SI).

In contrast, with random control, both measures remain almost unchanged regardless of the value of $\epsilon$. Therefore, the rapid decrease in both measures can be attributed to the strategic design of the polarization mitigation rather than to perturbing link weights per se.

Dependence of both polarization measures, (a) $\langle |x| \rangle$ and (b) $\mathrm{std}(x)$, on $\epsilon$. The simulations were conducted with $N=100$ over 1000 time steps. Parameter values were set as $\alpha = 3$, $\beta = 3$, and $K = 3$. Each data point represents an average over 100 independent realizations.

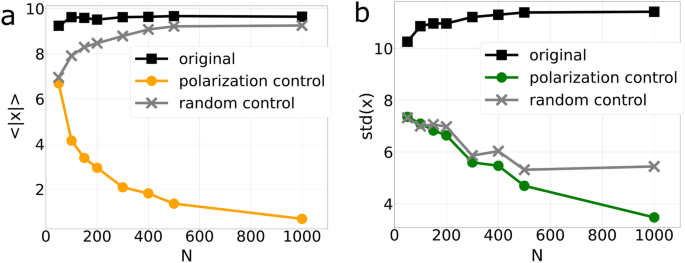

Finally, we examine how the network size $N$ affects the effectiveness of polarization mitigation, with $(\alpha, \beta, K) = (3, 3, 3)$ and $\epsilon = 0.01$ fixed. Figure 9 shows $\langle |x| \rangle$ and $\mathrm{std}(x)$ averaged over 100 simulations for $N = 50, 100, 150, 200, 300, 400, 500, 1000$. The black lines represent the original model without mitigation, the gray lines represent random mitigation, and the yellow and green lines represent polarization mitigation. In the original model, the degree of polarization hardly changes with $N$. In contrast, with polarization mitigation, polarization is increasingly suppressed as $N$ increases. Therefore, polarization mitigation becomes more effective as the network size increases.

Dependence of both polarization measures, (a) $\langle |x| \rangle$ and (b) $\mathrm{std}(x)$, on the network size $N$. The simulations were conducted over 1000 time steps. Parameter values were set as $\alpha = 3$, $\beta = 3$, and $K = 3$. The perturbation strength was fixed at $\epsilon = 0.01$. Each data point represents an average over 100 independent realizations.

Discussion

In this study, we introduced perturbations inspired by adversarial attacks to prevent opinion polarization in simulated networked populations. The results showed that the mitigation effect increases with $\epsilon$, which controls the perturbation strength. We also found that when $\epsilon \ge 0.08$, the mean absolute value of opinions falls to nearly zero for any setting of $\alpha$ and $\beta$. Moreover, the larger the network size $N$, the more effectively polarization was suppressed.

As shown in Fig. 8, even a small perturbation strength $\epsilon$ can effectively reduce polarization, suggesting that continuous perturbations could sustain this mitigation over time. Moreover, as demonstrated in Fig. 5, the proposed perturbation method remains effective even when the intervention is introduced after radicalization or polarization has already emerged. This robustness indicates that the perturbation mechanism could influence network dynamics over the long term by continuously counteracting the network’s natural tendency toward polarization.

One of this study’s main contributions is applying an opinion control method, previously used only in voter models, to a different opinion dynamics model. Whereas the voter model studies aimed to invert the voting outcome of a group, the purpose of this study is to suppress opinion polarization within the group. Other major differences are that the opinion variables are continuous rather than binary and that the network structure evolves over time.

The results of this study show that slight manipulations of the weights of social network links can significantly alter the distribution of opinions in a population. The results in Fig. 5 highlight the capacity of our intervention to depolarize even networks that have already experienced significant opinion divergence, reinforcing the practical effectiveness of this method. However, larger values of $\epsilon$ might overly dampen opinion diversity, resulting in an undesirable over-convergence toward neutrality in scenarios where diversity of opinion is important. Therefore, $\epsilon$ should be chosen to mitigate polarization without eliminating diversity.

While traditional approaches like the “balanced diet” algorithm17 or cross-cutting communication18 require active and substantial modifications to the system, our method achieves polarization mitigation through minimal perturbations to the network structure.

In this context, comparing our proposed method with random control is sufficient to demonstrate its effectiveness, as our primary goal was to show that even small, carefully designed perturbations can effectively reduce polarization. This “minimal intervention” approach could be particularly valuable in real-world applications where large-scale modifications to social networks might be impractical or undesirable.

In real-world SNS, link weights can be interpreted as the frequency of contact between users. Although real-world social networks have more complex dynamics and structures, which calls for caution when applying our method directly, the proposed approach provides a basic framework for mitigating polarization through small changes to the network structure. Service providers may therefore be able to influence the opinion dynamics of a population by making small adjustments to how frequently individual users’ content is displayed to one another. Our results also show that the proposed mitigation method is more effective for larger networks, consistent with findings from previous voter model studies. The underlying reason for this observation is currently unknown; future work should analytically characterize the dependence on $N$, as has been done for the voter model14.

In addition, our model might be perceived as merely supporting the simple contact hypothesis, which suggests that increased contact with opposing opinions should reduce polarization. While Pettigrew et al. (2011)19 and Paluck et al. (2019)20 focused on prejudice reduction through intergroup contact, our study extends this line of inquiry by examining how contact between individuals with differing opinions might mitigate opinion polarization. Although distinct, both phenomena explore how increased exposure to different perspectives can potentially reduce social divisions, whether in the form of prejudice or opinion polarization.

We acknowledge that contextual factors significantly influence whether exposure to opposing views reduces polarization. In some cases, these factors might even exacerbate polarization due to the backfire effect21. It is necessary to address these nuances and emphasize the importance of considering such factors in future research.

Future research could explore how specific contextual factors, such as the strength of pre-existing beliefs, the nature of the communication medium, or the social identity of the individuals involved, modify the impact of increased contact in our model. This could lead to more nuanced predictions about when and why interventions to reduce polarization are likely to be effective in real-world settings.

Model

In this study, we consider an opinion dynamics model in social networks7. In this model, a group composed of multiple agents forms a network. Each agent possesses an opinion as an internal state and modifies its opinion under the social influence of surrounding agents within the network. By modeling these changes, we observe how group opinions and network structures change over time. Furthermore, after identifying the parameter values that lead to opinion polarization, we aim to mitigate it by introducing perturbations inspired by adversarial attacks.

We chose this model because it provides a well-established framework that effectively captures the key dynamics of opinion polarization. Compared to other models, such as the bounded confidence model, this approach allows for a more flexible representation of social influence and maintains analytical tractability, making it particularly suitable for our study.

Model settings

We consider a network composed of $N$ agents. We assume a binary issue of choice, where the stance of agent $i$ on the issue at time $t$ is represented by a one-dimensional opinion variable $x_i(t) \in (-\infty, +\infty)$. $x_i$ takes a positive value if agent $i$ supports the issue and a negative value if the agent opposes it, and the absolute value of $x_i$ indicates the extremity of agent $i$’s opinion. Real opinions cannot reach infinite extremes; the unbounded range is a modeling idealization. When we refer to an opinion as large, we mean the magnitude of its deviation from the neutral state $x=0$, in either the positive or the negative direction.

Opinion update

Each agent in the network changes its opinion over time due to social influence. The dynamics of opinions are described by a system of $N$ ordinary differential equations:

$$
\frac{dx_i}{dt} = -x_i + K \sum_{j=1}^{N} A_{ij}(t) \tanh(\alpha x_j), \qquad i = 1, \dots, N. \tag{1}
$$

$K\,(>0)$ represents the strength of social interaction among agents. $\alpha\,(>0)$ represents the degree of controversy of the issue and controls the shape of the sigmoidal influence function $\tanh(\alpha x)$, which expresses the nonlinear effect that an agent’s opinion has on the opinions of surrounding agents. As $\alpha$ increases, the function becomes more sensitive to opinions that are closer to neutral.
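A short calculation, added here for illustration, shows why a larger $\alpha$ makes the influence function more sensitive near neutrality:

$$
\frac{d}{dx}\tanh(\alpha x) = \alpha\,\mathrm{sech}^2(\alpha x), \qquad \left.\frac{d}{dx}\tanh(\alpha x)\right|_{x=0} = \alpha, \qquad \lim_{x \to \pm\infty} \tanh(\alpha x) = \pm 1.
$$

The slope at the neutral point equals $\alpha$, while the maximum influence stays bounded by 1. For example, with $\alpha = 3$ an opinion of $x = 0.5$ already exerts $\tanh(1.5) \approx 0.905$, over 90% of the maximum influence, whereas with $\alpha = 0.5$ the same opinion exerts only $\tanh(0.25) \approx 0.245$.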

$A_{ij}(t)$ is an element of the adjacency matrix of the network at time $t$. The second term on the right-hand side of the equation represents the change in opinion due to the influence of surrounding agents’ opinions. In general, the magnitude of influence increases with the strength of the opinion. However, because $\tanh$ saturates, the influence that any single neighbor can exert is bounded, so opinions far from neutrality do not exert arbitrarily large influence. The model does not account for the preferences of individual agents. For agents who receive no social interaction, opinions decay toward neutrality over time due to the first term on the right-hand side. At each time step of the simulation, the opinions are updated by numerically solving this differential equation for each agent.
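A minimal sketch of one explicit Euler step of the opinion dynamics $dx_i/dt = -x_i + K \sum_j A_{ij} \tanh(\alpha x_j)$, assuming a dense NumPy adjacency matrix in which `A[i, j]` weights the influence of agent $j$ on agent $i$. The step size `dt` is a hypothetical choice; the text does not state the value used.

```python
import numpy as np

def euler_opinion_step(x, A, K, alpha, dt):
    """One explicit Euler step of dx_i/dt = -x_i + K * sum_j A_ij * tanh(alpha * x_j)."""
    social = A @ np.tanh(alpha * x)      # sum over neighbours j of A_ij * tanh(alpha * x_j)
    return x + dt * (-x + K * social)    # decay toward 0 plus social influence

# Two isolated agents (no links): each opinion shrinks by the factor (1 - dt)
x = np.array([1.0, -0.5])
print(euler_opinion_step(x, np.zeros((2, 2)), K=3.0, alpha=3.0, dt=0.1))
```

The isolated-agent example isolates the first term: with `dt=0.1`, an opinion of 1.0 becomes 0.9 after one step, illustrating the pull toward neutrality in the absence of social interaction.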

Network update

We consider a time-varying social network in which agents form connections by linking to other agents at each time step; links represent interactions between agents. At each time step, agents are activated to construct the network. Each agent is assigned an activity $a_i$, the probability of being activated at each time step, drawn from the following power-law distribution:

$$
F(a) = \frac{1-\gamma}{1-\delta^{1-\gamma}}\, a^{-\gamma}, \qquad a \in [\delta, 1]. \tag{2}
$$

The activity $a_i$ is constant for each agent throughout the simulation. The parameters $\gamma$ and $\delta$ control the shape of the power-law distribution. In our simulations, we set $\gamma = 2.1$ and $\delta = 0.01$, following a previous study on opinion polarization7. The parameter $\delta$ sets the minimum activity level of each agent, ensuring that no agent’s activity falls below a certain threshold7. This models a more realistic scenario in which all agents have at least some level of engagement in social interactions. Similarly, $\gamma$ controls how activity is distributed among agents7. Lower values of $\gamma$ lead to a more uniform distribution of activity, in which all agents have similar levels of engagement. In contrast, higher values of $\gamma$ result in a more unequal distribution, in which a few agents are highly active and more likely to connect with others. A network is constructed by activated agents forming links with other agents at each time step. The probability $p_{ij}$ of forming a link from agent $i$ to agent $j$ is expressed as:

$$
p_{ij} = \frac{|x_i - x_j|^{-\beta}}{\sum_{k \neq i} |x_i - x_k|^{-\beta}}, \tag{3}
$$

where $\beta$ represents sensitivity to similarity. The parameter $\beta$ controls the power-law decay of the connection probability with opinion distance7, reflecting how strongly agents prefer to connect with others of similar opinion. The larger the value of $\beta$, the higher the probability that agents with similar opinions form a link. We also introduce a reciprocity parameter $r$: if a link is created from agent $i$ to $j$, a link from $j$ to $i$ is also created with probability $r$. At each time step, the network is updated by having each active agent create links to an average of $m$ agents according to Eq. (3).
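The activation and link-formation steps can be sketched as follows. This is an illustration under stated assumptions, not the authors’ code: activities are drawn from $F(a) \propto a^{-\gamma}$ on $[\delta, 1]$ by inverse-transform sampling; each active agent links to exactly `m` distinct agents (a simplification of “an average of $m$”, and `m = 10` is a hypothetical value); `A[i, j] = 1` marks a link from $i$ to $j$, taken to mean that $j$ influences $i$; and a small floor on opinion distance is a hypothetical safeguard against dividing by zero when two opinions coincide.

```python
import numpy as np

def sample_activities(n, gamma, delta, rng):
    """Draw n activities from F(a) proportional to a**(-gamma) on [delta, 1]."""
    e = 1.0 - gamma
    u = rng.random(n)
    # CDF(a) = (a**e - delta**e) / (1 - delta**e); solve CDF(a) = u for a
    return (delta**e + u * (1.0 - delta**e)) ** (1.0 / e)

def update_network(x, activity, m, beta, r, rng):
    """Return a fresh 0/1 adjacency matrix for one time step (A[i, j] = 1: link i -> j)."""
    n = len(x)
    A = np.zeros((n, n))
    active = np.flatnonzero(rng.random(n) < activity)   # agent i active with prob a_i
    for i in active:
        # p_ij proportional to |x_i - x_j|**(-beta); 1e-12 floor is a hypothetical safeguard
        w = np.maximum(np.abs(x - x[i]), 1e-12) ** (-beta)
        w[i] = 0.0                                      # no self-links
        targets = rng.choice(n, size=m, replace=False, p=w / w.sum())
        for j in targets:
            A[i, j] = 1.0
            if rng.random() < r:                        # reciprocal link j -> i
                A[j, i] = 1.0
    return A

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, 100)
a = sample_activities(100, gamma=2.1, delta=0.01, rng=rng)
A = update_network(x, a, m=10, beta=3.0, r=0.5, rng=rng)   # m = 10 is hypothetical
```

Sampling without replacement keeps each active agent’s `m` targets distinct; with $\beta = 3$, most of the probability mass falls on agents whose opinions are closest to $x_i$.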

Polarization mitigation

Based on the adversarial attack method in the voter models12,13, we introduce a polarization mitigation method aimed at suppressing opinion polarization within a group. When approximations are applied (see the Supplementary Information S1), the polarization mitigation is ultimately expressed as a perturbation to the network, as:

where $A_{ij}$ and $A_{ij}^{*}$ represent the weighted adjacency matrices of the network before and after adding the perturbation, respectively, and $\epsilon$ controls the strength of the perturbation. This method thus introduces perturbations by slightly changing the weights of the links at each time step. Equation (4) is a simplified approximation and represents a control strategy that weakens the weight of the link from agent $j$ to agent $i$ if the opinion of the neighboring agent $j$ agrees with that of agent $i$, and strengthens it otherwise.

To evaluate the performance of the polarization mitigation, a random control strategy was implemented as a comparison. The perturbation introduced by random control is expressed as:

where $s$ is drawn uniformly at random from the set $\{-1, +1\}$.
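The two control strategies can be contrasted in a short sketch. The exact form of Eq. (4) follows from the approximations in Supplementary Information S1; the strategic rule below instead assumes the simplest sign-based form matching the verbal description: on each existing link, subtract $\epsilon$ when $x_i$ and $x_j$ share a sign and add $\epsilon$ otherwise. The random control likewise assumes that each existing link receives $\epsilon s$ with $s \in \{-1, +1\}$. Both functions are hypothetical reconstructions for illustration.

```python
import numpy as np

def strategic_perturbation(A, x, eps):
    """Assumed sign-based rule: weaken same-sign links, strengthen opposite-sign links."""
    agree = np.outer(np.sign(x), np.sign(x))   # +1 if x_i, x_j share a sign, -1 if opposite
    # For a 0/1 matrix this is A_ij - eps * sgn(x_i) * sgn(x_j) on existing links only
    return A - eps * A * agree

def random_perturbation(A, eps, rng):
    """Random control: each existing link is shifted by +eps or -eps with equal probability."""
    s = rng.choice([-1.0, 1.0], size=A.shape)
    return A + eps * A * s

x = np.array([1.0, 0.5, -1.0])
A = np.ones((3, 3)) - np.eye(3)                # all-to-all, no self-links
As = strategic_perturbation(A, x, 0.1)         # row 0: link to agent 1 -> 0.9, to agent 2 -> 1.1
Ar = random_perturbation(A, 0.1, np.random.default_rng(1))
```

Multiplying by `A` restricts both perturbations to links that exist in the current time step, so agents that are not connected remain unconnected.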

Simulation settings

The simulations are run for a total of 1000 time steps with the number of agents fixed at $N=100$, except in a few scenarios. We set the reciprocity parameter to $r=0.5$, following the original study7, which demonstrated that the results are robust to variations in $r$. At each time step, agents are activated, the network is updated, polarization mitigation is applied, and opinions are updated. Opinions are updated by numerically integrating Eq. (1) with the Euler method. The initial opinions are drawn uniformly from $x_i(0) \in [-1, +1]$. We choose a uniform distribution of initial opinions because it provides a neutral starting point for observing the natural evolution of opinion dynamics without predisposing the system to any particular outcome.
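Putting the steps together, one simulation run in the order stated above (activation, network update, mitigation, Euler opinion update) might look like the sketch below. The defaults for `K`, `alpha`, `beta`, `gamma`, `delta`, and `r` follow values quoted in the text. The following are hypothetical choices not given in the text: `m = 10` links per active agent, one Euler step of size `dt = 0.01` per time step, a distance floor of `1e-12`, and an assumed sign-based mitigation rule that subtracts $\epsilon\,\mathrm{sgn}(x_i)\,\mathrm{sgn}(x_j)$ on existing links (`eps=0` disables it).

```python
import numpy as np

def simulate(N=100, T=1000, K=3.0, alpha=3.0, beta=3.0, gamma=2.1, delta=0.01,
             r=0.5, m=10, eps=0.0, dt=0.01, seed=0):
    """Sketch of one run; m, dt and the mitigation rule are hypothetical choices."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, N)                     # x_i(0) ~ U[-1, 1]
    e = 1.0 - gamma                                   # activities via inverse-CDF sampling
    a = (delta**e + rng.random(N) * (1.0 - delta**e)) ** (1.0 / e)
    for _ in range(T):
        # 1) activation and 2) network update: a fresh adjacency matrix each step
        A = np.zeros((N, N))
        for i in np.flatnonzero(rng.random(N) < a):
            w = np.maximum(np.abs(x - x[i]), 1e-12) ** (-beta)
            w[i] = 0.0                                # no self-links
            for j in rng.choice(N, size=m, replace=False, p=w / w.sum()):
                A[i, j] = 1.0
                if rng.random() < r:                  # reciprocity
                    A[j, i] = 1.0
        # 3) mitigation: assumed sign-based rule (eps = 0 leaves A unchanged)
        A = A - eps * A * np.outer(np.sign(x), np.sign(x))
        # 4) opinion update: one explicit Euler step of Eq. (1)
        x = x + dt * (-x + K * (A @ np.tanh(alpha * x)))
    return x

final = simulate(N=100, T=200, eps=0.1, seed=1)
print(np.mean(np.abs(final)), np.std(final))
```

Runs with `eps=0.0` and `eps=0.1` can be compared using $\langle |x| \rangle$ and $\mathrm{std}(x)$. No particular outcome is implied here, since the hypothetical `dt` and `m` affect the time scale of the dynamics.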

To assess the sensitivity of the system, we also vary the initial conditions and model parameters. Specifically, we examine how the system responds to variations in $\alpha$ and $\beta$, the initial network structure, $\epsilon$, and $N$.

Data availability

The datasets generated and analyzed during the current study are available in the Dryad repository: https://doi.org/10.5061/dryad.jq2bvq8jb.

References

1. Barberá, P., Jost, J. T., Nagler, J., Tucker, J. A. & Bonneau, R. Tweeting from left to right: Is online political communication more than an echo chamber? Psychol. Sci. 26, 1531–1542 (2015).
2. Del Vicario, M. et al. The spreading of misinformation online. Proc. Natl. Acad. Sci. U.S.A. 113, 554–559 (2016).
3. Lelkes, Y., Sood, G. & Iyengar, S. The hostile audience: The effect of access to broadband internet on partisan affect. Am. J. Political Sci. 61, 5–20 (2017).
4. Allcott, H., Braghieri, L., Eichmeyer, S. & Gentzkow, M. The welfare effects of social media. Am. Econ. Rev. 110, 629–676 (2020).
5. Garimella, K., De Francisci Morales, G., Gionis, A. & Mathioudakis, M. Political discourse on social media: Echo chambers, gatekeepers, and the price of bipartisanship. In Proceedings of the 2018 World Wide Web Conference, 913–922 (2018).
6. Lazer, D. M. J. et al. The science of fake news. Science 359, 1094–1096 (2018).
7. Baumann, F., Lorenz-Spreen, P., Sokolov, I. M. & Starnini, M. Modeling echo chambers and polarization dynamics in social networks. Phys. Rev. Lett. 124, 048301 (2020).
8. Liu, J., Huang, S., Aden, N. M., Johnson, N. F. & Song, C. Emergence of polarization in coevolving networks. Phys. Rev. Lett. 130, 037401 (2023).
9. Vosoughi, S., Roy, D. & Aral, S. The spread of true and false news online. Science 359, 1146–1151 (2018).
10. Sasahara, K. et al. Social influence and unfollowing accelerate the emergence of echo chambers. J. Comput. Soc. Sci. 4, 381–402 (2021).
11. Santos, F. P., Lelkes, Y. & Levin, S. A. Link recommendation algorithms and dynamics of polarization in online social networks. Proc. Natl. Acad. Sci. U.S.A. 118, e2102141118 (2021).
12. Chiyomaru, K. & Takemoto, K. Adversarial attacks on voter model dynamics in complex networks. Phys. Rev. E 106, 014301 (2022).
13. Chiyomaru, K. & Takemoto, K. Mitigation of adversarial attacks on voter model dynamics by network heterogeneity. J. Phys. Complex. 4, 025009 (2023).
14. Mizutaka, S. Crossover phenomenon in adversarial attacks on voter model. J. Phys. Complex. 4, 035009 (2023).
15. Ruiz, N., Bargal, S. A. & Sclaroff, S. Disrupting deepfakes: Adversarial attacks against conditional image translation networks and facial manipulation systems. arXiv:2003.01279 (2020).
16. Liang, C. & Wu, X. Mist: Towards improved adversarial examples for diffusion models. arXiv:2305.12683 (2023).
17. Garimella, K., De Francisci Morales, G., Gionis, A. & Mathioudakis, M. Balancing opposing views to reduce controversy. In Proceedings of the 10th ACM International Conference on Web Search and Data Mining (ACM, 2017).
18. Balietti, S., Getoor, L., Goldstein, D. G. & Watts, D. J. Reducing opinion polarization: Effects of exposure to similar people with differing political views. Proc. Natl. Acad. Sci. U.S.A. 118, e2112552118 (2021).
19. Pettigrew, T. F., Tropp, L. R., Wagner, U. & Christ, O. Recent advances in intergroup contact theory. Int. J. Intercult. Relat. 35, 271–280 (2011).
20. Paluck, E. L., Green, S. A. & Green, D. P. The contact hypothesis re-evaluated. Behav. Public Policy 3, 129–158 (2019).
21. Bail, C. A. et al. Exposure to opposing views on social media can increase political polarization. Proc. Natl. Acad. Sci. U.S.A. 115, 9216–9221 (2018).

Author information


Contributions

MN and GI designed the research; MN and GI performed the simulations; MN and KT performed the mathematical analysis, and GI verified the analysis. All authors discussed the results. MN, GI, and KT wrote the paper.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ninomiya, M., Ichinose, G., Chiyomaru, K. et al. Mitigating opinion polarization in social networks using adversarial attacks.

Sci Rep 15, 9033 (2025). https://doi.org/10.1038/s41598-025-93213-z

